Ethical Considerations and Future Directions

Ethical Implications of AI in Drug Discovery

The integration of artificial intelligence (AI) into drug development presents a complex web of ethical considerations. One crucial area is data privacy and security. AI algorithms often rely on large datasets of patient information, which can expose individuals to data breaches and misuse. Robust data governance and stringent security protocols, such as access controls and de-identification of records, are essential to protect patient confidentiality and prevent exploitation.

A second concern is bias in AI models trained on historical data. If that data underrepresents certain populations, as has often been the case in clinical research, the resulting models may favor treatments that disproportionately benefit some groups while neglecting others. Rigorous testing and evaluation, combined with diverse datasets, are essential for mitigating these risks and promoting equitable access to new therapies.

Another critical ethical consideration is accountability. As AI systems take on more responsibility in the drug discovery process, it becomes increasingly important to define who is responsible when errors or misinterpretations arise from AI-driven decisions; without clear lines of accountability, transparency and trust in the process erode. The potential for job displacement through AI-driven automation also calls for proactive strategies for workforce retraining and adaptation. Addressing these issues will require open discussion and collaboration among researchers, policymakers, and industry stakeholders.

Addressing Data Bias and Ensuring Fairness

Ensuring that the benefits of AI-driven drug development are distributed fairly and equitably is paramount. A critical first step is to identify and mitigate biases in the data used to train AI algorithms. Diverse and representative datasets should be prioritized so that existing societal biases are not perpetuated in the design and development of new treatments. Researchers and developers must also watch for biases introduced by the design of the models themselves, continually evaluating the algorithms for discriminatory outcomes.

Ongoing monitoring is also needed to ensure that AI systems do not inadvertently reinforce existing health disparities. Regular audits of model performance across patient populations, together with input from diverse stakeholders, can help identify and correct biases early. This approach also requires continued dialogue about the ethical implications of AI and its impact on different populations, so that the benefits of AI-powered drug discovery are accessible to all.
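As an illustration of what a regular performance audit might check, the sketch below compares a binary classifier's accuracy and true positive rate (sensitivity) across patient subgroups, and flags the model when the largest between-group gap in true positive rate exceeds a tolerance. This is related to the "equal opportunity" criterion from the fairness literature. It is a minimal sketch in plain Python: the function names, the `max_gap=0.1` tolerance, and the toy labels and group names are hypothetical choices for illustration, not a regulatory standard.

```python
from collections import defaultdict

def subgroup_rates(y_true, y_pred, groups):
    """Compute accuracy and true positive rate (sensitivity) for each subgroup."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "tp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(truth == pred)
        if truth == 1:  # only actual positives contribute to the TPR
            c["pos"] += 1
            c["tp"] += int(pred == 1)
    report = {}
    for group, c in counts.items():
        tpr = c["tp"] / c["pos"] if c["pos"] else None  # undefined without positives
        report[group] = {"accuracy": c["correct"] / c["n"], "tpr": tpr}
    return report

def flag_disparity(report, metric="tpr", max_gap=0.1):
    """Return (flagged, gap), where gap is the largest between-group difference in `metric`."""
    values = [r[metric] for r in report.values() if r[metric] is not None]
    if len(values) < 2:
        return False, 0.0
    gap = max(values) - min(values)
    return gap > max_gap, gap

# Hypothetical toy data: true outcomes, model predictions, and subgroup labels.
y_true = [1, 1, 0, 1, 1, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

report = subgroup_rates(y_true, y_pred, groups)
flagged, gap = flag_disparity(report)
print(report)
print(flagged, gap)  # → True 1.0
```

Here the model catches every positive case in group A but none in group B, so the audit flags it even though group B's accuracy (0.5) alone might look tolerable. In practice, the metric and tolerance would need clinical and domain input, and small subgroups would call for confidence intervals rather than point estimates.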

Potential Impacts on Clinical Trials and Regulatory Processes

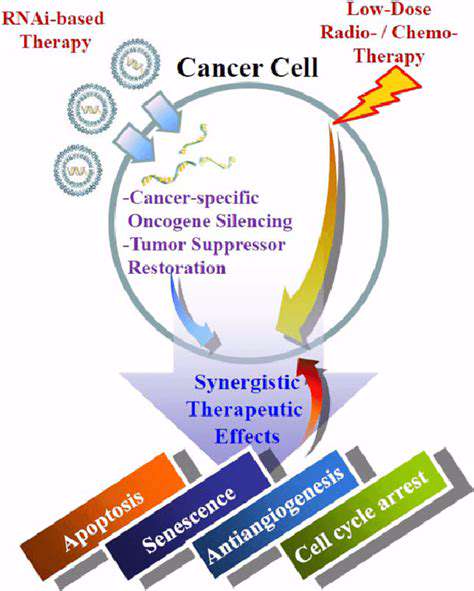

AI's increasing role in drug development is poised to reshape clinical trials and regulatory processes. AI-powered tools can accelerate the identification of promising drug candidates, potentially reducing the time and cost of development compared with traditional methods. However, AI-generated results must be independently validated before they can support conclusions about the safety and efficacy of new treatments. This requires robust preclinical testing and rigorous clinical trial designs that confirm the findings of AI-driven analyses.

AI can also streamline regulatory submissions by producing comprehensive data summaries and insights. Adapting regulatory frameworks to accommodate AI-derived evidence, however, requires careful consideration. Guidelines are needed to ensure that AI-driven results meet the same standards of rigor and transparency as those generated through traditional methods, including explicit criteria for validating and verifying AI-generated data within the approval process.

The Future of Human-AI Collaboration in Drug Discovery

The future of AI in drug development likely lies in human-AI collaboration that leverages the strengths of both. AI can augment human capabilities by automating tasks, identifying patterns, and generating hypotheses. Human oversight and judgment must nevertheless remain central to decision-making, particularly when interpreting complex biological data and evaluating the potential risks of new treatments. This collaborative approach will be crucial for ensuring that AI-driven discoveries align with ethical principles and societal values.

Continued research and development are needed to address the limitations of current AI approaches. Improvements in AI algorithms, coupled with rigorous human oversight, will likely yield more reliable and effective drug discovery strategies. Together, humans and AI can accelerate the pace of innovation while preserving the safeguards necessary for the responsible and ethical development of new medicines.

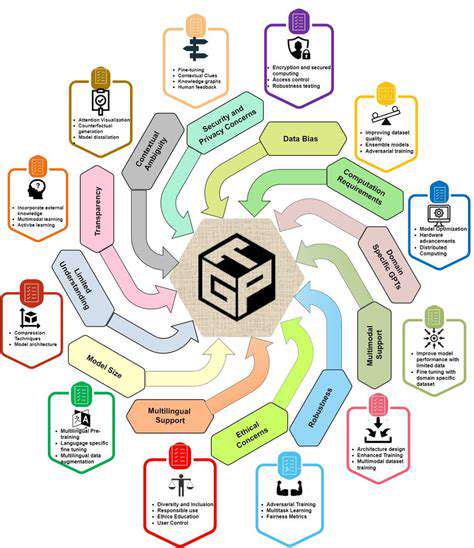

Ensuring Transparency and Explainability in AI Models

Transparency and explainability are essential for building trust and ensuring accountability in AI-driven drug development. Understanding how a model arrives at its predictions is vital for assessing their validity and reliability: it allows researchers to identify potential biases, validate findings, and check that results agree with established scientific understanding. For example, if a model flags a compound as likely toxic, researchers need to know which molecular features drove that prediction before acting on it. This is particularly important when evaluating the safety and efficacy of new treatments.
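One widely used, model-agnostic way to probe which inputs drive a model's predictions is permutation feature importance: shuffle one feature at a time and measure how much the model's performance drops. The minimal sketch below implements that idea in plain Python. `toy_model`, the two-feature data, and the `n_repeats` and `seed` defaults are hypothetical stand-ins for a real trained model and real molecular descriptors. For production work, scikit-learn provides `sklearn.inspection.permutation_importance`.

```python
import random

def toy_model(x):
    """Hypothetical classifier that only looks at feature 0."""
    return int(x[0] > 0.5)

def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the outcome
            X_perm = [list(row) for row in X]
            for row, value in zip(X_perm, column):
                row[j] = value
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical data: two numeric features per compound; labels come from the model itself.
X = [[0.1, 3.0], [0.9, 1.0], [0.2, 2.0], [0.8, 5.0], [0.3, 4.0],
     [0.7, 0.0], [0.4, 6.0], [0.6, 7.0], [0.05, 8.0], [0.95, 9.0]]
y = [toy_model(x) for x in X]

scores = permutation_importance(toy_model, X, y)
print(scores)  # feature 1 scores exactly 0.0 because the model ignores it
```

A large drop signals that the model relies on a feature. Permutation importance describes the model's behavior rather than the underlying biology, however, and it can be misleading when features are strongly correlated with one another.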

Creating clear and accessible explanations for AI-generated insights is critical for fostering public trust and engagement. Furthermore, promoting open access to AI models and algorithms allows for peer review and validation, which can help identify and address potential flaws or biases. Openness and transparency are crucial for ensuring that AI-driven breakthroughs in drug development benefit all of society, rather than just a select few.